In this tutorial, we will use an example to show you how to extract all links in a pdf file using python pymupdf library.

1.Install PyMuPDF

pip install PyMuPDF

2.Import library

import fitz # PyMuPDF

3.Extract all links

filename = "book.pdf"

with fitz.open(filename) as my_pdf_file:

#loop through every page

for page_number in range(1, len(my_pdf_file)+1):

# acess individual page

page = my_pdf_file[page_number-1]

for link in page.links():

#if the link is an extrenal link with http or https (URI)

if "uri" in link:

#access link url

url = link["uri"]

print(f'Link: "{url}" found on page number --> {page_number}')

#if the link is internal or file with no URI

else:

pass

In order to extract from pdf, we should:

(1) Get pdf page using my_pdf_file[page_number-1]

page = my_pdf_file[page_number-1]

(2) Using page.links() to get all links in the current page

for link in page.links()

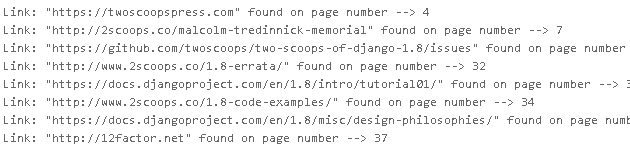

Run this code, you may get these links: